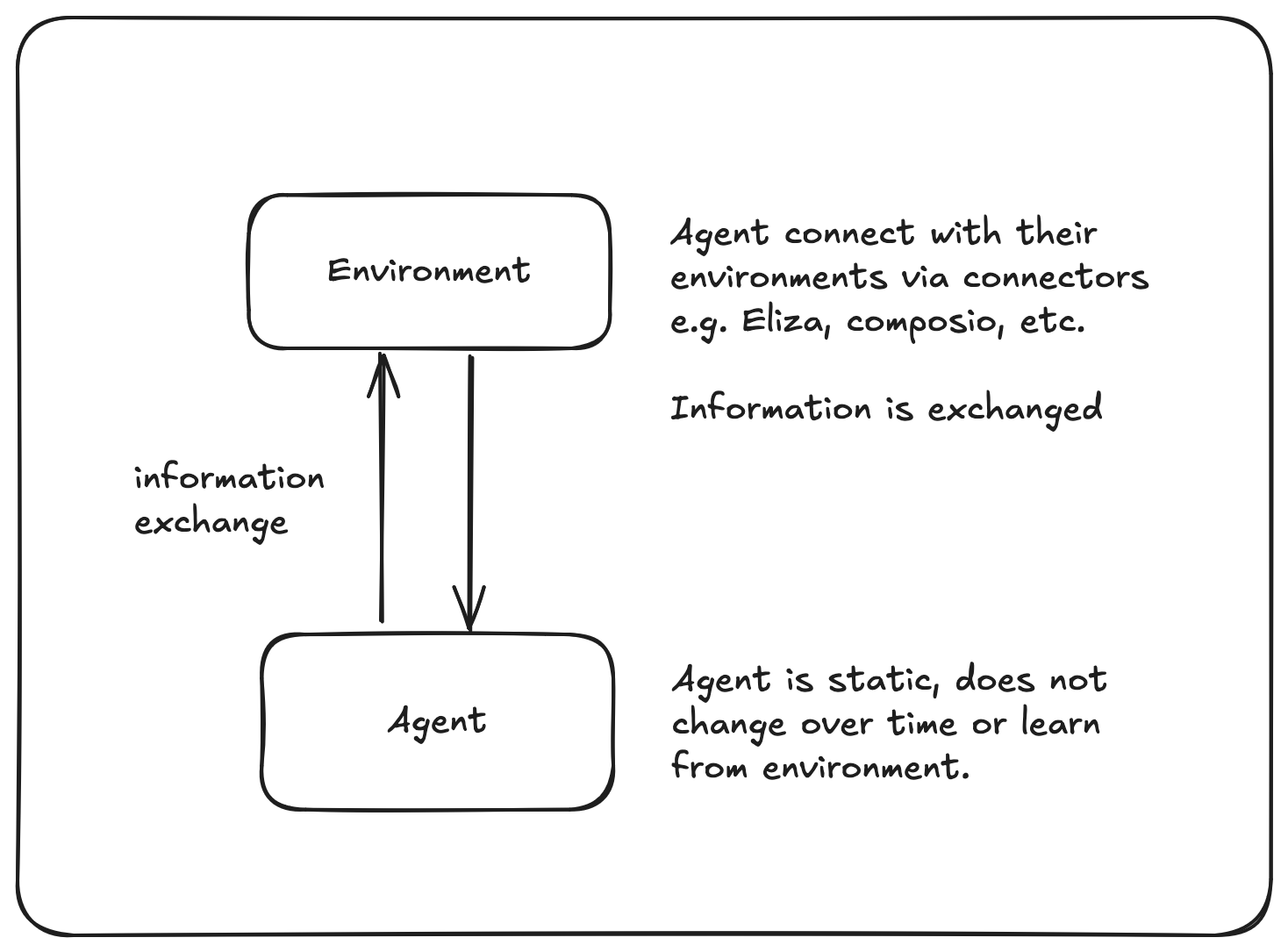

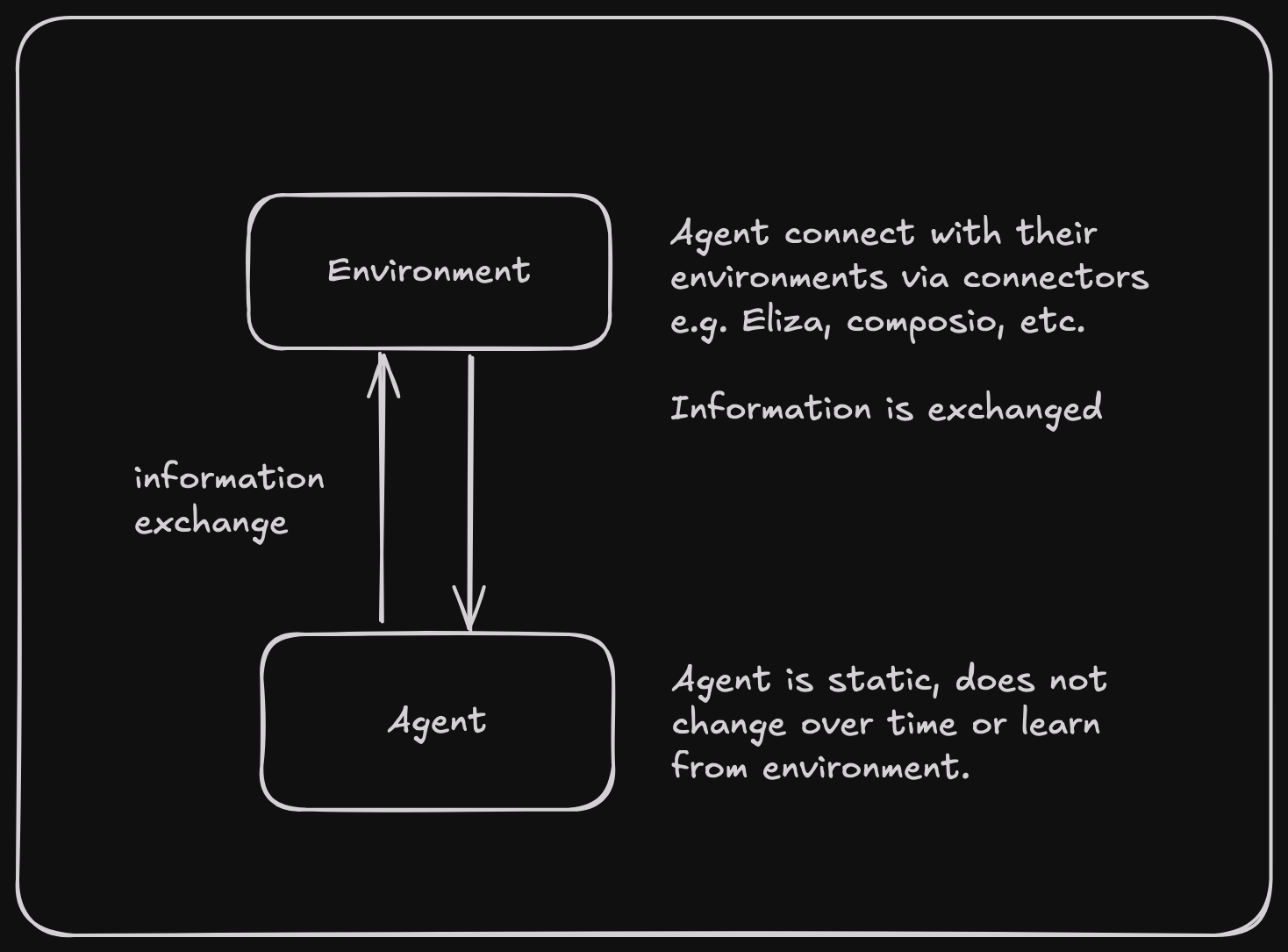

Basic Agent-Environment Interaction (Stage 1)

The first diagram illustrates the simplest form of AI agent interaction:

- Static Agent: A basic AI agent with fixed behavior and capabilities

- Environment Connectors: Integration points (e.g., Eliza, Composio) that connect the agent to various environments

- Information Exchange: Simple back-and-forth communication between agent and environment

- Limitations: Agent remains static and cannot learn from or adapt to the environment

This represents the current state of most AI agents: they can interact with an environment but cannot evolve or improve over time.
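As a rough illustration of Stage 1, the sketch below pairs a fixed-behavior agent with a connector interface. All names here (`EnvironmentConnector`, `ScriptedConnector`, `StaticAgent`, `run_episode`) are hypothetical and are not taken from evolveRL, Eliza, or Composio. The model call is replaced by a string template so the example runs on its own.

```python
from typing import Protocol


class EnvironmentConnector(Protocol):
    """Hypothetical integration point; not a real Eliza or Composio API."""

    def receive(self) -> str: ...
    def send(self, message: str) -> None: ...


class ScriptedConnector:
    """Toy in-memory connector: replays fixed observations, records replies."""

    def __init__(self, observations: list[str]) -> None:
        self._pending = list(observations)
        self.sent: list[str] = []

    def receive(self) -> str:
        return self._pending.pop(0)

    def send(self, message: str) -> None:
        self.sent.append(message)


class StaticAgent:
    """Agent whose behavior is fixed at construction and never updated."""

    def __init__(self, prompt: str) -> None:
        self.prompt = prompt

    def act(self, observation: str) -> str:
        # Stand-in for a model call: output depends only on prompt + observation.
        return f"[{self.prompt}] reply to: {observation}"


def run_episode(agent: StaticAgent, env: EnvironmentConnector, steps: int) -> None:
    """Simple back-and-forth exchange between agent and environment."""
    for _ in range(steps):
        env.send(agent.act(env.receive()))
```

Because nothing in `StaticAgent` changes between calls, the same observation always produces the same reply, which is the limitation this stage describes.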

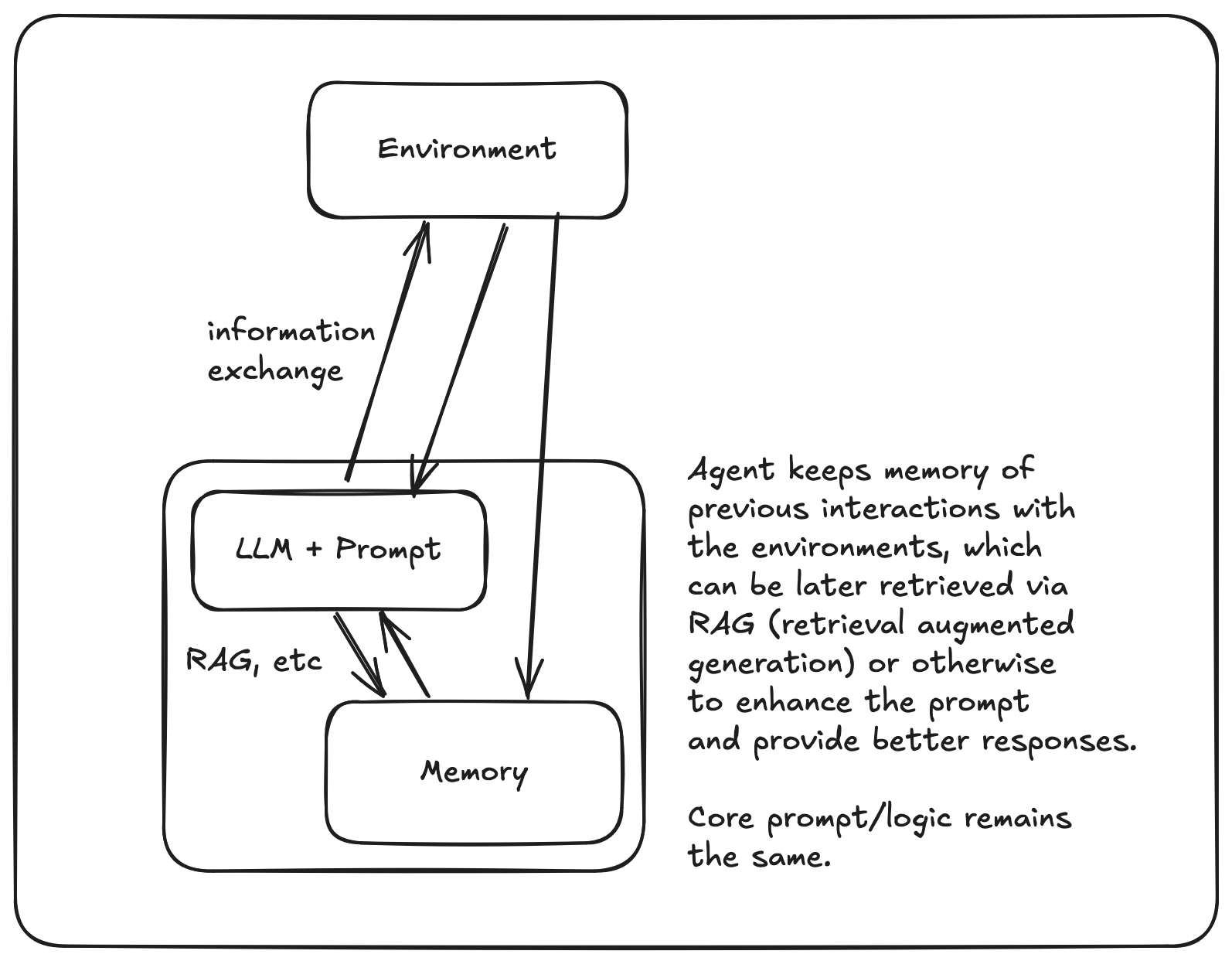

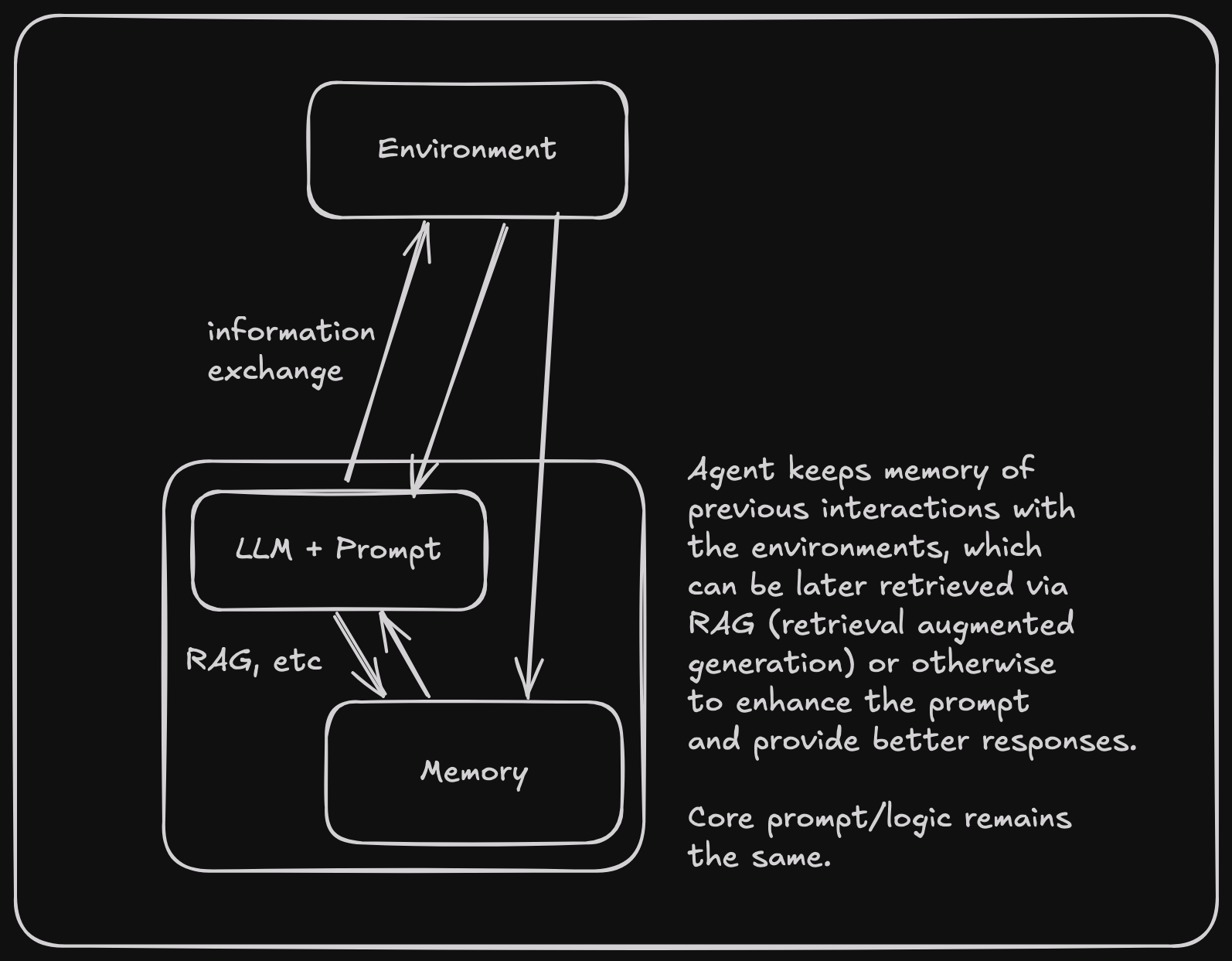

Memory-Enhanced Agent (Stage 2)

The second diagram introduces memory capabilities:

- LLM + Prompt System: Core agent functionality powered by language models

- Memory Storage: Retains information from past interactions

- Enhanced Responses: Uses stored memory (via RAG or similar) to provide context-aware responses

- Still Limited: While memory improves responses, the core prompt and logic remain static

This represents an improvement over Stage 1, but the agent still cannot fundamentally improve its capabilities.
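A minimal sketch of the Stage 2 idea follows, assuming a toy word-overlap lookup in place of a real RAG pipeline. `MemoryStore` and `MemoryAgent` are hypothetical names used for illustration only, and the LLM call is replaced by a placeholder string.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryStore:
    """Toy memory: stores past interactions, retrieves by shared words."""

    entries: list[str] = field(default_factory=list)

    def add(self, text: str) -> None:
        self.entries.append(text)

    def retrieve(self, query: str, k: int = 2) -> list[str]:
        query_words = set(query.lower().split())
        scored = [
            (len(query_words & set(entry.lower().split())), entry)
            for entry in self.entries
        ]
        # Keep entries sharing at least one word, highest overlap first.
        relevant = [(score, entry) for score, entry in scored if score > 0]
        relevant.sort(key=lambda pair: pair[0], reverse=True)
        return [entry for _, entry in relevant[:k]]


@dataclass
class MemoryAgent:
    prompt: str  # fixed: the agent never rewrites its own prompt
    memory: MemoryStore = field(default_factory=MemoryStore)

    def build_context(self, observation: str) -> str:
        recalled = self.memory.retrieve(observation)
        return "\n".join([self.prompt, *recalled, observation])

    def act(self, observation: str) -> str:
        context = self.build_context(observation)
        self.memory.add(observation)
        # Stand-in for an LLM call on the assembled context.
        return f"response using {len(context.splitlines())} context lines"
```

The context grows as memory fills, so later responses can draw on earlier interactions, but `prompt` and the logic in `act` stay the same throughout.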

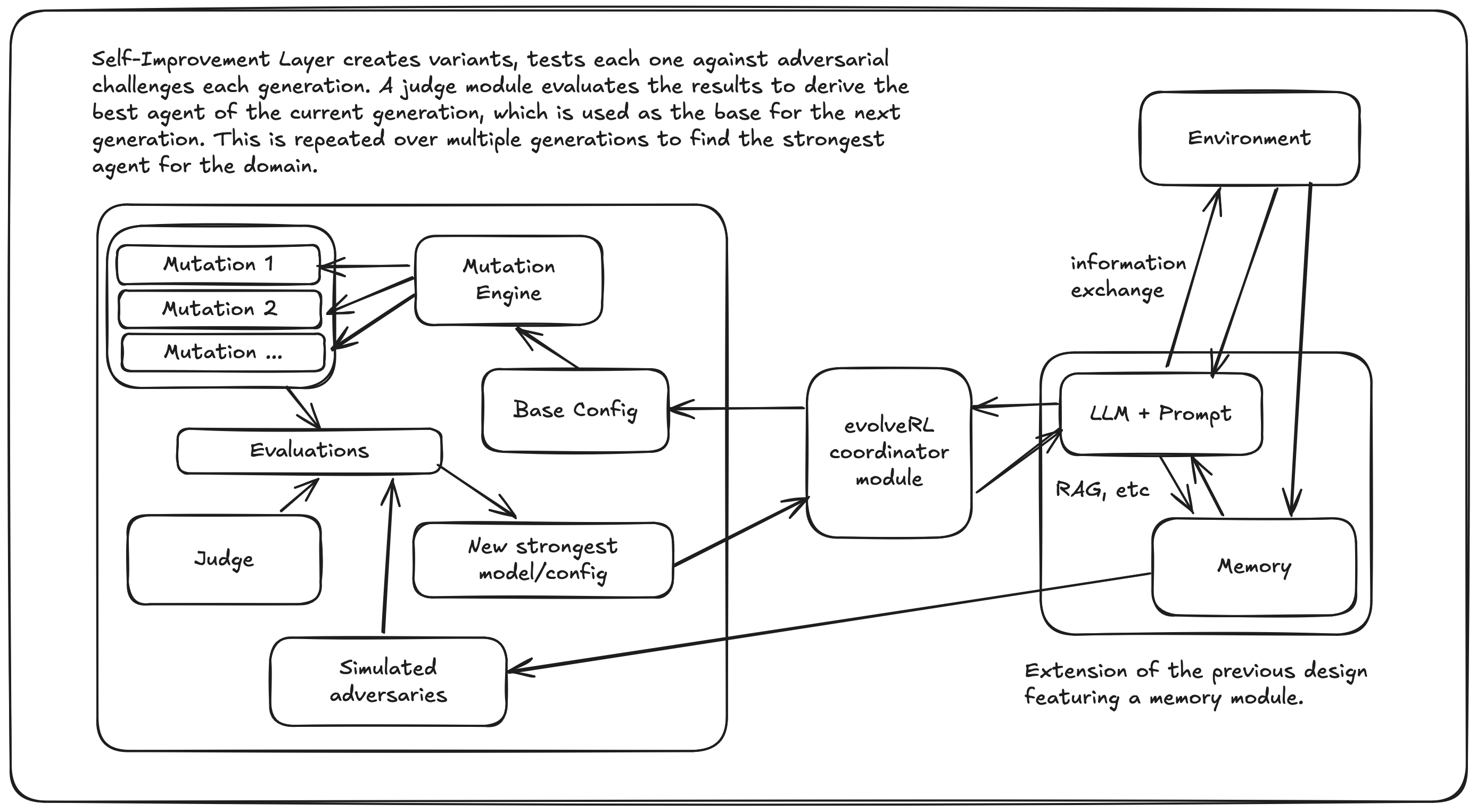

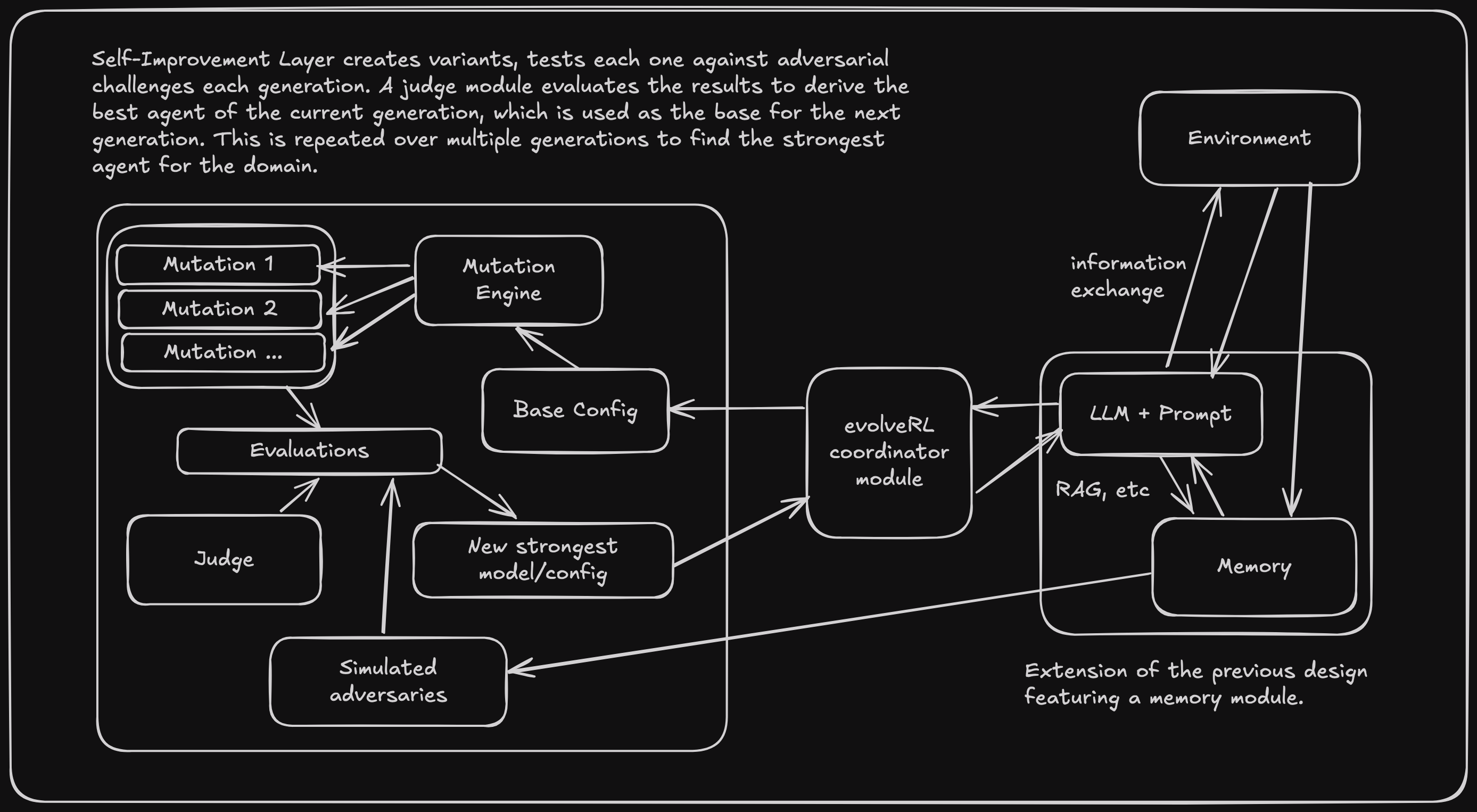

evolveRL Full System (Stage 3)

The third diagram shows the complete evolveRL system, adding self-improvement capabilities:

- Base Agent System: Maintains memory and environment interaction from Stage 2

- Evolution Coordinator: New module that manages the self-improvement process

- Self-Improvement Layer: Contains three key components:

  - Variant Generator: Creates mutations of the agent with different characteristics

  - Adversarial Simulator: Uses stored memory to create challenging test scenarios

  - Performance Judge: Evaluates how variants perform against adversaries

The evolution process works as follows:

1. Agent interacts with environment and stores experiences in memory

2. Evolution coordinator periodically triggers improvement cycles

3. Variant generator creates multiple versions of the agent

4. Adversarial simulator creates test scenarios based on past interactions

5. Judge evaluates each variant’s performance

6. Best-performing variants become the new base agent

7. Process repeats over multiple generations
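The cycle described above can be sketched as a small evolutionary loop. This is an illustrative toy under stated assumptions, not evolveRL's actual API: the `Agent` fields, the `verbosity` characteristic, the mutation range, and the scoring rule inside `judge` are all hypothetical placeholders. A real judge would score each variant's behavior on the generated scenarios.

```python
import random
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Agent:
    prompt: str
    verbosity: float  # hypothetical tunable characteristic in [0, 1]


def generate_variants(base: Agent, n: int, rng: random.Random) -> list[Agent]:
    """Variant generator: mutate one characteristic of the base agent."""
    variants = []
    for _ in range(n):
        mutated = min(1.0, max(0.0, base.verbosity + rng.uniform(-0.2, 0.2)))
        variants.append(replace(base, verbosity=mutated))
    return variants


def simulate_adversaries(memory: list[str], n: int, rng: random.Random) -> list[str]:
    """Adversarial simulator: derive test scenarios from stored interactions."""
    return [f"hard case: {rng.choice(memory)}" for _ in range(n)]


def judge(agent: Agent, scenarios: list[str]) -> float:
    """Performance judge: placeholder score that peaks at verbosity 0.7."""
    return sum(1.0 - abs(agent.verbosity - 0.7) for _ in scenarios) / len(scenarios)


def evolve(base: Agent, memory: list[str], generations: int, seed: int = 0) -> Agent:
    """Evolution coordinator: run repeated improvement cycles."""
    rng = random.Random(seed)
    for _ in range(generations):
        scenarios = simulate_adversaries(memory, n=3, rng=rng)
        # Keep the current base among the candidates so selection never regresses.
        candidates = [base, *generate_variants(base, n=4, rng=rng)]
        base = max(candidates, key=lambda a: judge(a, scenarios))
    return base
```

Keeping the current base in the candidate pool is a design choice made for this sketch: it guarantees that the agent selected in each generation scores at least as well as the one it replaces.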

This system enables true self-improvement, allowing agents to:

- Learn from past experiences

- Adapt to new challenges

- Evolve better strategies

- Continuously improve performance

The key innovation is the addition of the evolution layer, which transforms a static or memory-enhanced agent into one capable of systematic self-improvement through evolutionary pressure.